Computers are getting smarter, and tasks are getting bigger. But did you know running multiple jobs isn’t as simple as it sounds? That’s where parallel and concurrent processing come in. People often mix up these terms, but each works very differently, and knowing the difference can make your apps faster and smoother.

Modern systems often mix both. Concurrency manages many tasks whose execution overlaps in time, while parallelism runs some of them at the very same moment on separate cores or nodes. This combo powers everything from multi-core computers to large enterprise software such as Oracle E-Business Suite.

In this article, you will learn these concepts in simple terms, see how they really work, and explore where each one shines in the real world.

What is Concurrent Processing?

Concurrent processing means a system can handle multiple tasks during the same period of time. It does not always run them simultaneously. Instead, it switches between them very quickly.

This switching creates the impression that everything happens at once. It helps systems stay responsive, even when many tasks wait in line. You can see this in phones, apps, and large systems that manage many requests at once.
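Python’s standard `asyncio` module gives a compact way to see concurrency on a single thread. In this minimal sketch, two tasks take turns: each `await asyncio.sleep(0)` hands control back to the event loop, which then resumes the other task. The `worker` and `run_concurrently` names and the step counts are illustrative choices, not part of any particular system.

```python
import asyncio


async def worker(name: str, steps: int, log: list[str]) -> None:
    """Do `steps` small units of work, yielding control after each one."""
    for i in range(steps):
        log.append(f"{name}{i}")
        # sleep(0) suspends this task and lets the event loop
        # resume another task that is ready to run.
        await asyncio.sleep(0)


async def run_concurrently() -> list[str]:
    log: list[str] = []
    # Both tasks share one thread; they overlap in time but never run at the same instant.
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log))
    return log


if __name__ == "__main__":
    print(asyncio.run(run_concurrently()))  # prints ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
```

The interleaved output shows both tasks making progress during the same period, even though only one of them executes at any moment.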

Characteristics Of Concurrent Processing

- Tasks Run in Overlapping Time: Concurrent processing lets tasks run in overlapping time. Tasks start, pause, and resume as needed, and the system switches between them quickly.

- Shared Resources Are Used: Many tasks share resources like memory or data. The system manages this shared access to avoid conflicts.

- Scheduling Controls Task Flow: Scheduling guides the entire process. The scheduler picks which task runs next and balances fairness and speed.

- Ideal for Interactive Systems: Concurrent processing is well-suited to interactive systems. It supports quick responses and handles many small tasks with ease.

- Tasks Only Appear to Run Together: Tasks do not always run simultaneously. They only need to appear active together. The system switches between them fast, creating a smooth multitasking effect.

- Better System Utilization: Concurrent processing boosts system utilization. When one task waits, for example on disk or network I/O, the CPU can run another task instead of sitting idle. Resources are used efficiently, and more work gets done in the same amount of time.
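The shared-resource and scheduling points above can be sketched with Python’s `threading` module. Several threads increment one shared counter; the operating system scheduler decides when each thread runs, and a `threading.Lock` lets only one thread at a time perform the read, add, and write step, so no update is lost. The thread and iteration counts are arbitrary illustration values.

```python
import threading


def count_with_lock(num_threads: int = 4, increments: int = 10_000) -> int:
    """Have several threads increment one shared counter safely."""
    counter = 0
    lock = threading.Lock()

    def work() -> None:
        nonlocal counter
        for _ in range(increments):
            # Only one thread at a time may read, add to, and write back the counter.
            with lock:
                counter += 1

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish before reading the result
    return counter


if __name__ == "__main__":
    print(count_with_lock())  # prints 40000
```

Without the lock, `counter += 1` is not guaranteed to be atomic, so two threads could read the same old value and one increment would be lost. In standard CPython builds these threads interleave rather than execute Python code in parallel, which makes this a concurrency example rather than a parallelism one.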

What is Parallel Processing?

Parallel processing means running many tasks simultaneously. Your system breaks a job into smaller parts. Then it runs those parts together across multiple processors. This cuts the waiting time and boosts speed.

Think of it like a team painting a house. One person can’t do everything fast, but a group can paint different walls at the same time. Parallel processing works the same way. Computers use this method for heavy tasks such as data analysis, video rendering, and large calculations.
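The house-painting idea maps onto Python’s `concurrent.futures.ProcessPoolExecutor`: split one job into chunks, hand each chunk to a separate worker process so the chunks can run on different CPU cores at the same time, then combine the partial results. Summing a range of integers is only a stand-in for real heavy work, and the `split_range` and `parallel_sum` helpers and the default of four workers are illustrative assumptions.

```python
from concurrent.futures import ProcessPoolExecutor


def split_range(n: int, parts: int) -> list[range]:
    """Split range(n) into at most `parts` contiguous, non-overlapping chunks."""
    if n <= 0:
        return []
    step = -(-n // parts)  # ceiling division, so no numbers are left over
    return [range(start, min(start + step, n)) for start in range(0, n, step)]


def parallel_sum(n: int, workers: int = 4) -> int:
    """Sum 0..n-1 by summing chunks in separate processes."""
    chunks = split_range(n, workers)
    # The built-in sum() is the worker function. Built-ins are pickled by name,
    # so worker processes do not need to re-import this script to find it.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum, chunks)
        # Combining the partial results is the coordination step.
        return sum(partial_sums)


if __name__ == "__main__":
    # The __main__ guard matters on platforms that start workers by
    # re-importing the main module (for example, Windows and macOS).
    print(parallel_sum(10_000_000))  # prints 49999995000000
```

For a job this small, starting processes can cost more than it saves; the pattern pays off when each chunk carries substantial CPU work.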

Characteristics Of Parallel Processing

- Tasks Run at the Same Time: Parallel processing runs tasks at the same exact moment. Multiple processors work together to handle different parts of a job simultaneously.

- Requires Multiple Cores or Machines: It depends on hardware with multiple cores or nodes. Each core handles a separate task without switching.

- Tasks Work Independently: Tasks often run without depending on each other. Each task handles its own data and flow. This reduces conflicts and keeps the process fast and stable.

- Best for Heavy and Large Tasks: Parallel processing works well for large and intensive workloads. It handles complex calculations and big data operations with ease. This makes it useful in scientific computing, analysis, and simulations.

- Reduces Processing Time: It cuts long tasks into smaller pieces and runs them together. This reduces waiting time and improves efficiency. Users notice faster outputs and smoother performance.

- Needs Careful Coordination: Parallel tasks still need coordination. The system must combine results correctly. Good synchronization keeps everything accurate and prevents errors.
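The "Reduces Processing Time" and "Needs Careful Coordination" points meet a limit that Amdahl’s law makes concrete. If a fraction \(p\) of a job can be split across \(N\) cores and the rest (including combining results) stays serial, the best-case speedup \(S(N)\) over a single core is shown below. The values \(p = 0.9\) and \(N = 4\) are illustrative assumptions.

$$
\begin{aligned}
S(N) &= \frac{1}{(1 - p) + p / N} \\
S(4) &= \frac{1}{0.1 + 0.9 / 4} = \frac{1}{0.325} \approx 3.08 \\
\lim_{N \to \infty} S(N) &= \frac{1}{1 - p} = \frac{1}{0.1} = 10
\end{aligned}
$$

So four cores give roughly a 3x speedup here, not 4x, and no number of cores can push this job past 10x. The formula ignores communication and coordination overhead, which can reduce real speedups further.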

Benefits of Parallel Concurrent Processing (PCP)

| Environment | Benefits |
| --- | --- |
| All environments that support PCP | It speeds task execution. It reduces wait time. It improves workload balance. It increases system reliability. |
| Cluster Environments | Many servers share tasks. One server can fail without stopping work. It offers high uptime. |
| Massively Parallel Environments | Many processors run tasks together. It handles large data fast. It scales well. |
| Homogeneous Networked Environments | All machines use the same setup. It ensures steady performance. It reduces errors and conflicts. |

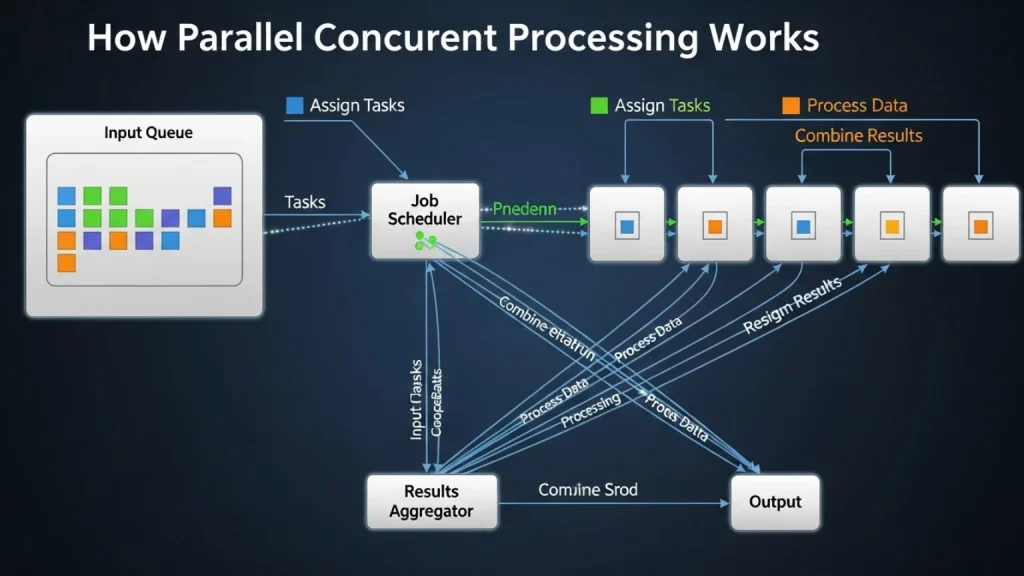

How Parallel Concurrent Processing Works

Parallel Concurrent Processing splits work across multiple nodes. It sends tasks to different machines at the same time. You get better performance because the load is spread across nodes instead of piling up on one.
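Spreading work across nodes can be pictured as a least-loaded dispatcher: each new request goes to whichever node currently has the least work. This is a hypothetical Python model of the general idea, not how Oracle PCP actually places work; the `dispatch` function, the node names, and the request costs are illustrative assumptions.

```python
import heapq


def dispatch(requests: list[tuple[str, int]], nodes: list[str]) -> dict[str, list[str]]:
    """Assign each (request_id, cost) pair to the node with the least total load so far.

    Hypothetical model for illustration only.
    """
    # Min-heap of (current_load, node_name); equal loads break ties by node name.
    heap = [(0, node) for node in nodes]
    heapq.heapify(heap)
    assignments: dict[str, list[str]] = {node: [] for node in nodes}
    for request_id, cost in requests:
        load, node = heapq.heappop(heap)  # least-loaded node right now
        assignments[node].append(request_id)
        heapq.heappush(heap, (load + cost, node))  # record its new load
    return assignments


if __name__ == "__main__":
    jobs = [("R1", 5), ("R2", 3), ("R3", 2), ("R4", 4)]
    print(dispatch(jobs, ["node1", "node2"]))
    # prints {'node1': ['R1', 'R4'], 'node2': ['R2', 'R3']}
```

Because R1 is expensive, the next two requests go to node2 before node1 receives another one. This greedy rule is simple but does not look ahead, so it will not always find the most even split.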

- Concurrent Managers

Concurrent managers handle the workload in Oracle E-Business Suite. They pick up requests and run them based on rules you set. They manage priorities, timing, and available resources. You can create different managers for different tasks. This gives you better control and smooth processing. It also helps you avoid delays during peak hours.

- Internal Concurrent Manager

The Internal Concurrent Manager (ICM) controls all other managers. It starts and stops them, processes control requests such as activating or deactivating a manager, and restarts managers that fail. This process helps your system stay stable. You get a smoother workflow because requests do not sit waiting for a manager that has gone down.

- Internal Monitor Processes

Internal monitor processes watch the Internal Concurrent Manager itself. If the ICM fails, an internal monitor detects it and restarts it automatically. This protects your workflow from interruptions. You don’t need to fix issues manually. The system handles it for you. This creates a more reliable processing environment.

- Log and Output File Access

Log and output files store details about each request. You can open them anytime to check results or errors. They help you understand what happened during processing. This makes troubleshooting quick and straightforward. You also get a clear view of completed tasks. It keeps your work transparent and easy to review.

- Integration with Platform-Specific Queuing and Load-Balancing Systems

PCP works with platform-level queuing and load-balancing tools. This integration spreads tasks across servers smoothly. It prevents overload on a single node. Your system stays stable even during heavy activity. It also increases processing speed. You gain better control, better performance, and a more flexible setup for changing workloads.
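The automatic restarts described above follow a common supervisor pattern: check each process for a recent heartbeat and restart any that has gone quiet. The Python sketch below is a hypothetical model of that pattern, not Oracle code; the `Manager` class, the `supervise` function, the 30-second timeout, and the explicit `now` timestamps are assumptions chosen to keep it simple and deterministic.

```python
from dataclasses import dataclass


@dataclass
class Manager:
    name: str
    last_heartbeat: float  # time of the last "still alive" signal, in seconds
    restarts: int = 0


def supervise(managers: list[Manager], now: float, timeout: float = 30.0) -> list[str]:
    """Restart every manager whose last heartbeat is older than `timeout` seconds.

    Returns the names of the managers that were restarted.
    """
    restarted = []
    for m in managers:
        if now - m.last_heartbeat > timeout:
            # A real supervisor would stop and relaunch the process here;
            # this model only counts the restart and resets the heartbeat.
            m.restarts += 1
            m.last_heartbeat = now
            restarted.append(m.name)
    return restarted


if __name__ == "__main__":
    fleet = [Manager("mgr_a", last_heartbeat=95.0), Manager("mgr_b", last_heartbeat=40.0)]
    print(supervise(fleet, now=100.0))  # prints ['mgr_b']
```

A supervisor would run a check like this on a timer; the same idea applies whether the process being watched is an ordinary manager or the ICM itself.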

Parallel vs Concurrent: Key Differences

| Point | Parallel Processing | Concurrent Processing |
| --- | --- | --- |
| Core Idea | It runs many tasks at the same time. | It handles many tasks, but not always at the same time. |
| Task Execution | Tasks run side by side. | Tasks switch quickly to share time. |
| System Requirement | It needs multiple CPU cores. | It works on single or multiple cores. |
| Speed Impact | It boosts speed for heavy tasks. | It improves responsiveness. |
| Best Use Case | Use it for tasks that can be split into independent parts. | Use it when tasks need coordination, wait on I/O, or interact with users. |
| Resource Use | It uses more system power. | It uses fewer resources. |
| Example | Image processing or large data jobs. | Running apps, handling requests, or managing users. |

Parallel Concurrent Processing Environments in Oracle

Oracle’s Parallel Concurrent Processing (PCP) lets multiple tasks run at the same time. Several concurrent managers can work across different nodes, which makes your system faster and more efficient. It handles large workloads smoothly and reduces wait times for jobs that would otherwise pile up.

Each concurrent manager in Oracle handles specific tasks. Some managers run regular jobs, while the Internal Concurrent Manager starts, stops, and monitors the other managers. You can view active and pending tasks in the Concurrent Manager window, which makes it easy to track performance and fix issues quickly.

Managing Parallel Concurrent Processing in Oracle

Oracle lets you run multiple tasks at once, improving speed and efficiency. Managing PCP comes down to a few core administrative tasks:

- Defining Concurrent Managers: Set up managers for specific jobs or system processes. Each manager knows what tasks to handle.

- Administering Concurrent Managers: Keep an eye on performance, check queues, and fix issues fast. Admins control everything from one place.

- Starting Managers: Launch managers whenever you need them. They immediately start processing assigned jobs.

- Shutting Down Managers: Stop managers safely without losing work. This keeps the system stable and organized.

- Migrating Managers: Move managers to different nodes when needed. It balances workload across your system.

- Terminating a Concurrent Process: End a task if it hangs or causes problems. Termination frees resources for other jobs.
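Migrating managers is easier to picture with a small model. In PCP, each concurrent manager can be given a primary node and a secondary node, and managers move to their secondary node if the primary fails. The hypothetical Python function below sketches only that failover rule; the `place_managers` name, the manager and node names, and the data layout are illustrative assumptions, not Oracle’s API or configuration format.

```python
from typing import Optional


def place_managers(
    managers: dict[str, tuple[str, str]], nodes_up: set[str]
) -> dict[str, Optional[str]]:
    """Decide which node each manager should run on.

    `managers` maps a manager name to its (primary_node, secondary_node).
    A manager runs on its primary node when that node is up, migrates to its
    secondary node when only the secondary is up, and is left unplaced
    (None) when both nodes are down.
    """
    placement: dict[str, Optional[str]] = {}
    for name, (primary, secondary) in managers.items():
        if primary in nodes_up:
            placement[name] = primary
        elif secondary in nodes_up:
            placement[name] = secondary  # failover: migrate to the backup node
        else:
            placement[name] = None  # nowhere to run until a node comes back
    return placement


if __name__ == "__main__":
    config = {"mgr_a": ("node1", "node2"), "mgr_b": ("node2", "node1")}
    print(place_managers(config, {"node1", "node2"}))  # prints {'mgr_a': 'node1', 'mgr_b': 'node2'}
    print(place_managers(config, {"node2"}))  # prints {'mgr_a': 'node2', 'mgr_b': 'node2'}
```

Running the function again once node1 is back places `mgr_a` on node1 again, which mirrors a manager returning to its primary node after a failure.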

Final Words

Parallel and concurrent processing power up computers, but in unique ways. Parallel executes multiple tasks simultaneously, while concurrent juggles tasks by switching between them fast. Knowing the difference helps you design smarter systems and write more efficient programs. Both methods improve performance; understanding them puts you in control.

Frequently Asked Questions

What is the difference between parallel and concurrent processing?

Parallel runs tasks at the same time on multiple cores. Concurrent handles tasks by switching between them quickly.

Is parallel processing faster than concurrent processing?

Yes, parallel processing can be faster for tasks that can run simultaneously. Concurrency improves responsiveness but not always speed.

Can concurrent processing run on a single core?

Yes, concurrent processing can run on a single core by quickly switching between tasks.

When should you use parallel or concurrent processing?

Use parallel for heavy tasks that can be split into parts. Use concurrent for tasks that need coordination or user interaction.

What are some examples of each?

Parallel: rendering videos or processing large datasets. Concurrent: handling web requests or multitasking apps.

Which one improves speed?

Parallel increases speed by running multiple tasks at once. Concurrency focuses on task management, not raw speed.